Create an MHC-Class I binding predictor in Python

Background

Note: Also see the follow up to this post.

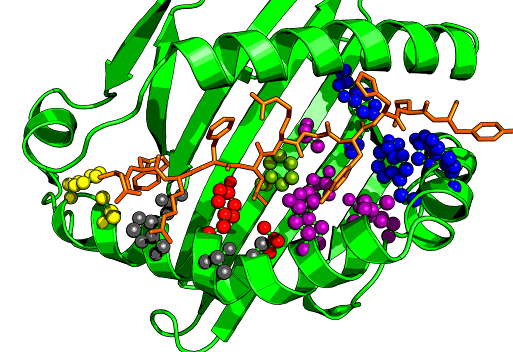

Peptide binding to MHC molecules is the key selection step in the Antigen-presentation pathway. This is essential for T cell immune responses. The ‘epitope’ is the peptide-MHC combination shown in the image at right. Key residues in the MHC contact the peptide and these differ between alleles. The prediction of peptide binding to MHC molecules has been much studied. The problem is simpler for class-I molecules since the binding peptide length is less variable (usually 8-11 but commonly 9). Typically binding predictors are based on training models with experimental binding affinity measurements with known peptide sequences. This data is available from the IEDB for many human alleles. New peptides can then be predicted based on their position specific similarity to the training data.

This requires encoding the peptide amino acid sequence numerically in a manner that captures the properties important for binding. Many possible encodings have been suggested and three are illustrated below.

Peptide encoding

Several encoding techniques have been proposed for representing sequence of amino acids in multidimensional metric spaces. In particular in this work we are interested in a simple encoding that is suited to be coupled with a machine learning algorithm. We will use pandas dataframes to construct the encoding, though probably not the most optimal for speed, it is convenient. First we import the required packages.

import os, sys, math

import numpy as np

import pandas as pd

%matplotlib inline

import matplotlib as mpl

import matplotlib.pyplot as plt

import seaborn as sns

sns.set_context("notebook", font_scale=1.4)

import epitopepredict as ep

Encode peptides

To encode a peptide a few schemes are illustrated here. None of these methods take into account the interdependence of the amino acids in terms of their relative positions.

One hot encoding

The first and simplest is a so-called ‘one-hot encoding’ of the amino acids producing a 20-column vector for each position that only contains a 1 where the letter corresponds to that amino acid.

The code below uses a pandas dataframe to construct the new features. The flatten command at the end re-arranges the 2-D matrix into a 1-D format so it can be used with the regression models. This applies to the other encoding methods also. The show_matrix method draws the 2D matrix as a table.

codes = ['A', 'C', 'D', 'E', 'F', 'G', 'H', 'I', 'K', 'L',

'M', 'N', 'P', 'Q', 'R', 'S', 'T', 'V', 'W', 'Y']

def show_matrix(m):

#display a matrix

cm = sns.light_palette("seagreen", as_cmap=True)

display(m.style.background_gradient(cmap=cm))

def one_hot_encode(seq):

o = list(set(codes) - set(seq))

s = pd.DataFrame(list(seq))

x = pd.DataFrame(np.zeros((len(seq),len(o)),dtype=int),columns=o)

a = s[0].str.get_dummies(sep=',')

a = a.join(x)

a = a.sort_index(axis=1)

show_matrix(a)

e = a.values.flatten()

return e

pep='ALDFEQEMT'

e=one_hot_encode(pep)

| A | C | D | E | F | G | H | I | K | L | M | N | P | Q | R | S | T | V | W | Y | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 2 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 3 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 4 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 5 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 |

| 6 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 7 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 |

| 8 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1 | 0 | 0 | 0 |

NLF encoding

This method of encoding is detailed by Nanni and Lumini in their paper. It takes many physicochemical properties and transforms them using a Fisher Transform (similar to a PCA) creating a smaller set of features that can describe the amino acid just as well. There are 19 transformed features. This is available on the github link below if you want to try it. The result is shown below for our sample peptide ALDFEQEMT.

#read the matrix a csv file on github

nlf = pd.read_csv('https://raw.githubusercontent.com/dmnfarrell/epitopepredict/master/epitopepredict/mhcdata/NLF.csv',index_col=0)

def nlf_encode(seq):

x = pd.DataFrame([nlf[i] for i in seq]).reset_index(drop=True)

show_matrix(x)

e = x.values.flatten()

return e

e=nlf_encode(pep)

| 1 | 2 | 3 | 4 | 5 | 6 | 7 | 8 | 9 | 10 | 11 | 12 | 13 | 14 | 15 | 16 | 17 | 18 | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 0.42 | 2.07 | 0.67 | 0.01 | 1.1 | 0.32 | 0.2 | 0.09 | 0.2 | 0.09 | 0.11 | 0.15 | 0.01 | 0.06 | 0.02 | 0.16 | 0.07 | 0.03 |

| 1 | 1.29 | 1.21 | 0.25 | 0.96 | 0.18 | 0.06 | 0.04 | 0 | 0.09 | 0.26 | 0.18 | 0.05 | 0 | 0.11 | 0.01 | 0.15 | 0.14 | 0.02 |

| 2 | 0.81 | 0.13 | 1.36 | 0.63 | 0.15 | 0.1 | 0.45 | 0.31 | 0.1 | 0.03 | 0.15 | 0.02 | 0.16 | 0.12 | 0.07 | 0.11 | 0.01 | 0.05 |

| 3 | 2.37 | 0.23 | 0.09 | 0.37 | 0.19 | 0.04 | 0.03 | 0.06 | 0.14 | 0.14 | 0.1 | 0.03 | 0.21 | 0.04 | 0.09 | 0.03 | 0.06 | 0.18 |

| 4 | 1.56 | 0.48 | 0.87 | 0.02 | 0.07 | 0.13 | 0.22 | 0.15 | 0.09 | 0.1 | 0.04 | 0.05 | 0.12 | 0.28 | 0.03 | 0.09 | 0.06 | 0.02 |

| 5 | 1.71 | 1.11 | 0.08 | 0.15 | 0.11 | 0.45 | 0.11 | 0.08 | 0.02 | 0.25 | 0.12 | 0.25 | 0.2 | 0.16 | 0.01 | 0.07 | 0.02 | 0.03 |

| 6 | 1.56 | 0.48 | 0.87 | 0.02 | 0.07 | 0.13 | 0.22 | 0.15 | 0.09 | 0.1 | 0.04 | 0.05 | 0.12 | 0.28 | 0.03 | 0.09 | 0.06 | 0.02 |

| 7 | 1.72 | 0.85 | 0.34 | 0.44 | 0.01 | 0.8 | 0.16 | 0.05 | 0.05 | 0.3 | 0.29 | 0.06 | 0.02 | 0.03 | 0.03 | 0.09 | 0.14 | 0 |

| 8 | 0.3 | 0.68 | 0.88 | 0.23 | 0.1 | 0.23 | 0.03 | 0.01 | 0.14 | 0.16 | 0.15 | 0.2 | 0.12 | 0.05 | 0.21 | 0.07 | 0 | 0.04 |

Blosum encoding

BLOSUM62 is a substitution matrix that specifies the similarity of one amino acid to another by means of a score. This score reflects the frequency of substiutions found from studying protein sequence conservation in large databases of related proteins. The number 62 refers to the percentage identity at which sequences are clustered in the analysis. Encoding a peptide this way means we provide the column from the blosum matrix corresponding to the amino acid at each position of the sequence. This produces 21x9 matrix. see https://www.sciencedirect.com/topics/biochemistry-genetics-and-molecular-biology/blosum

The code to produce this array is shown below.

blosum = ep.blosum62

def blosum_encode(seq):

#encode a peptide into blosum features

s=list(seq)

x = pd.DataFrame([blosum[i] for i in seq]).reset_index(drop=True)

show_matrix(x)

e = x.values.flatten()

return e

def random_encode(p):

return [np.random.randint(20) for i in pep]

e=blosum_encode(pep)

| A | R | N | D | C | Q | E | G | H | I | L | K | M | F | P | S | T | W | Y | V | B | Z | X | * | |

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| 0 | 4 | -1 | -2 | -2 | 0 | -1 | -1 | 0 | -2 | -1 | -1 | -1 | -1 | -2 | -1 | 1 | 0 | -3 | -2 | 0 | -2 | -1 | 0 | -4 |

| 1 | -1 | -2 | -3 | -4 | -1 | -2 | -3 | -4 | -3 | 2 | 4 | -2 | 2 | 0 | -3 | -2 | -1 | -2 | -1 | 1 | -4 | -3 | -1 | -4 |

| 2 | -2 | -2 | 1 | 6 | -3 | 0 | 2 | -1 | -1 | -3 | -4 | -1 | -3 | -3 | -1 | 0 | -1 | -4 | -3 | -3 | 4 | 1 | -1 | -4 |

| 3 | -2 | -3 | -3 | -3 | -2 | -3 | -3 | -3 | -1 | 0 | 0 | -3 | 0 | 6 | -4 | -2 | -2 | 1 | 3 | -1 | -3 | -3 | -1 | -4 |

| 4 | -1 | 0 | 0 | 2 | -4 | 2 | 5 | -2 | 0 | -3 | -3 | 1 | -2 | -3 | -1 | 0 | -1 | -3 | -2 | -2 | 1 | 4 | -1 | -4 |

| 5 | -1 | 1 | 0 | 0 | -3 | 5 | 2 | -2 | 0 | -3 | -2 | 1 | 0 | -3 | -1 | 0 | -1 | -2 | -1 | -2 | 0 | 3 | -1 | -4 |

| 6 | -1 | 0 | 0 | 2 | -4 | 2 | 5 | -2 | 0 | -3 | -3 | 1 | -2 | -3 | -1 | 0 | -1 | -3 | -2 | -2 | 1 | 4 | -1 | -4 |

| 7 | -1 | -1 | -2 | -3 | -1 | 0 | -2 | -3 | -2 | 1 | 2 | -1 | 5 | 0 | -2 | -1 | -1 | -1 | -1 | 1 | -3 | -1 | -1 | -4 |

| 8 | 0 | -1 | 0 | -1 | -1 | -1 | -1 | -2 | -2 | -1 | -1 | -1 | -1 | -2 | -1 | 1 | 5 | -2 | -2 | 0 | -1 | -1 | 0 | -4 |

Create a regression model to fit encoded features

We are going to create a regression model that can fit the encoded peptide featuers to known affinity data. This model is trained on the known data and then used to predict new peptides. The data used for training is primarily from the IEDB and was curated by the authors of MHCflurry from various sources. A sample of the data is shown below. The affinity measure is usually given as IC50 which is This scale is harder for regression so we linearize the scale using the equation log50k = 1-log(ic50) / log(50000).

df = ep.get_training_set()

display(df[:5])

| allele | peptide | ic50 | measurement_inequality | measurement_type | measurement_source | original_allele | log50k | length | |

|---|---|---|---|---|---|---|---|---|---|

| 0 | BoLA-1*21:01 | AENDTLVVSV | 7817.0 | = | quantitative | Barlow - purified MHC/competitive/fluorescence | BoLA-1*02101 | 0.171512 | 10 |

| 1 | BoLA-1*21:01 | NQFNGGCLLV | 1086.0 | = | quantitative | Barlow - purified MHC/direct/fluorescence | BoLA-1*02101 | 0.353937 | 10 |

| 2 | BoLA-2*08:01 | AAHCIHAEW | 21.0 | = | quantitative | Barlow - purified MHC/direct/fluorescence | BoLA-2*00801 | 0.718615 | 9 |

| 3 | BoLA-2*08:01 | AAKHMSNTY | 1299.0 | = | quantitative | Barlow - purified MHC/direct/fluorescence | BoLA-2*00801 | 0.337385 | 9 |

| 4 | BoLA-2*08:01 | DSYAYMRNGW | 2.0 | = | quantitative | Barlow - purified MHC/direct/fluorescence | BoLA-2*00801 | 0.935937 | 10 |

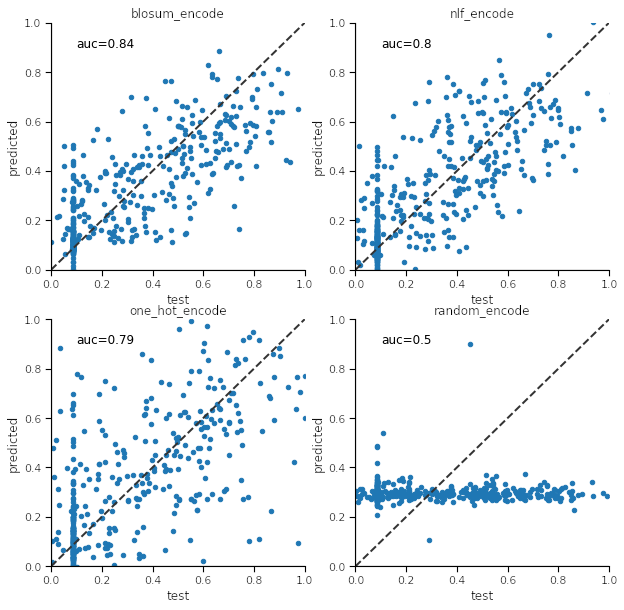

We wish to create a regression model to fit the peptide features against the true affinity values. The code below sets up a train/test scheme for a neural network regressor using scikit-learn. scikit-learn provides many possible models that can be created with relatively little knowledge of machine learning. I chose a neural network here because it seemed most appropriate after some experimentation. The parameters used were arrived at through a grid search which is not shown here as it is somewhat beyond the scope of this article. We then make scatter plots comparing the test to predicted values. The diagonal line is the ideal one to one value.

from sklearn import metrics

from sklearn.model_selection import train_test_split,cross_val_score,ShuffleSplit

from sklearn.neural_network import MLPRegressor

import epitopepredict as ep

def auc_score(true,sc,cutoff=None):

if cutoff!=None:

true = (true<=cutoff).astype(int)

sc = (sc<=cutoff).astype(int)

fpr, tpr, thresholds = metrics.roc_curve(true, sc, pos_label=1)

r = metrics.auc(fpr, tpr)

#print (r)

return r

def test_predictor(allele, encoder, ax):

reg = MLPRegressor(hidden_layer_sizes=(20), alpha=0.01, max_iter=500,

activation='relu', solver='lbfgs', random_state=2)

df = ep.get_training_set(allele, length=9)

#print (len(df))

X = df.peptide.apply(lambda x: pd.Series(encoder(x)),1)

y = df.log50k

X_train, X_test, y_train, y_test = train_test_split(X, y, test_size=0.1)

reg.fit(X_train, y_train)

sc = reg.predict(X_test)

x=pd.DataFrame(np.column_stack([y_test,sc]),columns=['test','predicted'])

x.plot('test','predicted',kind='scatter',s=20,ax=ax)

ax.plot((0,1), (0,1), ls="--", lw=2, c=".2")

ax.set_xlim((0,1)); ax.set_ylim((0,1))

ax.set_title(encoder.__name__)

auc = ep.auc_score(y_test,sc,cutoff=.426)

ax.text(.1,.9,'auc=%s' %round(auc,2))

sns.despine()

sns.set_context('notebook')

encs=[blosum_encode,nlf_encode,one_hot_encode,random_encode]

allele='HLA-A*03:01'

fig,axs=plt.subplots(2,2,figsize=(10,10))

axs=axs.flat

i=0

for enc in encs:

test_predictor(allele,enc,ax=axs[i])

i+=1

Build a predictor

It can be seen from the plots that blosum_encoding gives superior results (highest auc value) for this particular test/train set and allele. Repeating the process generally shows the same outcome. Now that we know how to make encode the peptides and make a model we can do it for any allele. We can write a routine that build the predictor from any training set.

def build_predictor(allele, encoder):

data = ep.get_training_set(allele)

if len(data)<200:

return

from sklearn.neural_network import MLPRegressor

reg = MLPRegressor(hidden_layer_sizes=(20), alpha=0.01, max_iter=500,

activation='relu', solver='lbfgs', random_state=2)

X = data.peptide.apply(lambda x: pd.Series(encoder(x)),1)

y = data.log50k

print (allele, len(X))

reg.fit(X,y)

return reg

Train and save models

Now we can just use the code above for all alleles in which we have training data (>200 samples) and produce a model for each one. Each model is saved to disk for later use so we don’t have to re-train every time we want to predict peptides for that allele. sklearn uses the joblib library to persist models, which is similar to the pickle module.

def get_allele_names():

d = ep.get_training_set(length=9)

a = d.allele.value_counts()

a =a[a>200]

return list(a.index)

al = get_allele_names()

path = 'models'

for a in al:

fname = os.path.join(path, a+'.joblib')

reg = build_predictor(a, blosum_encode)

if reg is not None:

joblib.dump(reg, fname, protocol=2)

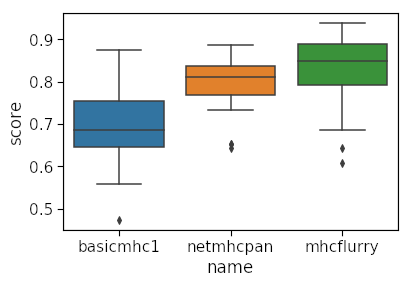

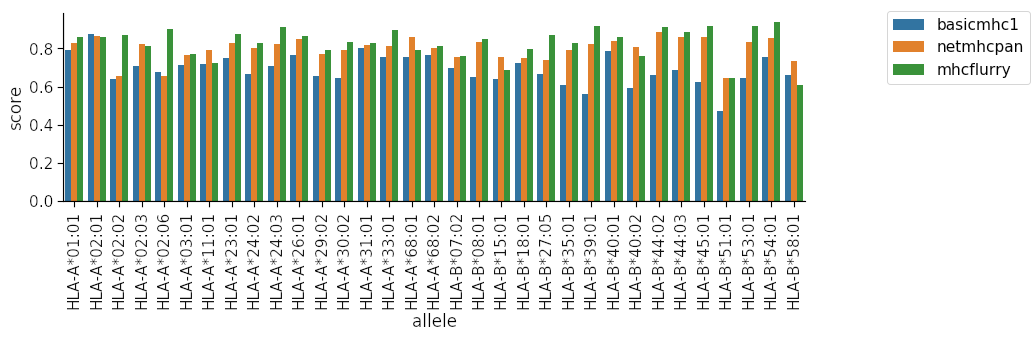

Comparison to other prediction algorithms

The two best MHC-class I prediction tools are currently netMHC/netMHCpan-4.0 and MHCFlurry. It is useful to compare our results to those. In order to do this I have implemented the algorithm we made (which uses the code above) as a predictor in the epitopepredict package. The object is created using P = ep.get_predictor('basicmhc1'). This standardizes the calls to prediction and the results returned so it can be directly compared to the other tools. The code below creates 3 predictor objects and evaluates their performance on some new data for each allele available. The score is then recoreded each time. Importantly, the peptides in the evaluation set are not present in the training set.

Note that predictors all return an ic50 value but internally use the log50k value for fitting. The predictors are evaluated using the roc auc metric with a threshold of 500nM. The auc is a common metric for classiifcation and can be used for regression if a threshold is chosen. Though others measures may be used such as pearson correlation co-efficient.

def evaluate_predictor(P, allele):

data = ep.get_evaluation_set(allele, length=9)

#print (len(data))

P.predict_peptides(list(data.peptide), alleles=allele, cpus=4)

x = P.get_scores(allele)

x = data.merge(x,on='peptide')

auc = auc_score(x.ic50,x.score,cutoff=500)

return auc, data

preds = [ep.get_predictor('basicmhc1'),

ep.get_predictor('netmhcpan',scoring='affinity'),

ep.get_predictor('mhcflurry')]

comp=[]

evalset = ep.get_evaluation_set(length=9)

test_alleles = evalset.allele.unique()

for P in preds:

m=[]

for a in test_alleles:

try:

auc,df = evaluate_predictor(P, a)

m.append((a,auc,len(df)))

except Exception as e:

print (a,e)

pass

m=pd.DataFrame(m,columns=['allele','score','size'])

m['name'] = P.name

comp.append(m)

Results

Finally we use the resultant dataframe to plot the auc scores for each method per allele.

c=pd.concat(comp)

x=pd.pivot_table(c,index=['allele','size'],columns='name',values='score')#.reset_index()

def highlight_max(s):

is_max = s == s.max()

return ['background-color: yellow' if v else '' for v in is_max]

#display(x.style.apply(highlight_max,1))

#print(c)

ax=sns.boxplot(data=c,y='score',x='name')#,hue='allele')

g=sns.catplot(data=c,y='score',x='allele',hue='name',

kind='bar',aspect=3,height=5,legend=False)

plt.legend(bbox_to_anchor=(1.1, 1.05))

plt.setp(g.ax.get_xticklabels(), rotation=90);

plt.tight_layout()

plt.savefig('benchmarks.png')

x.to_csv('benchmarks.csv')

You can see clearly that our method is quite inferior to the other two for many of the alleles! Our model is limited in several ways:

- it only works with 9-mers

- some alleles have preference for different peptide lengths and this is not accounted for

- limited training data for some alleles, the other tools use methods to overcome this

- the neural network is not likely not sophisticated enough

Why the blosum62 encoding is better than the others tested is not entirely clear. However it should be better than one hot encoding since there is information loss when simply using 0 and 1 in a sparse matrix. As mentioned the predictor is available from within the epitopepredict package. It is used as a basic simple model only. To create the basic MHC predictor in epitopepredict we would use the code below. In the future this approach will be used as a basis for improving the predictor. Improvements would include handling different length peptides and changing the regression model.

Using epitopepredict

P = ep.get_predictor('basicmhc1')

from epitopepredict import peptutils

seqs = peptutils.create_random_sequences(10)

df = pd.DataFrame(seqs,columns=['peptide'])

res = P.predict_peptides(df.peptide, alleles=ep.get_preset_alleles('mhc1_supertypes'), cpus=1)

References

- L. Nanni and A. Lumini, “A new encoding technique for peptide classification,” Expert Syst. Appl., vol. 38, no. 4, pp. 3185–3191, 2011.

- V. Jurtz, S. Paul, M. Andreatta, P. Marcatili, B. Peters, and M. Nielsen, “NetMHCpan-4.0: Improved Peptide–MHC Class I Interaction Predictions Integrating Eluted Ligand and Peptide Binding Affinity Data,” J. Immunol., vol. 199, no. 9, 2017.

- T. J. O’Donnell, A. Rubinsteyn, M. Bonsack, A. B. Riemer, U. Laserson, and J. Hammerbacher, “MHCflurry: Open-Source Class I MHC Binding Affinity Prediction,” Cell Syst., vol. 7, no. 1, p. 129–132.e4, 2018.

- J. Hu and Z. Liu, “DeepMHC : Deep Convolutional Neural Networks for High-performance peptide-MHC Binding Affinity Prediction,” bioRxiv, pp. 1–20, 2017.

- https://www.iedb.org/